Ranger Paimon Plugin

Introduction

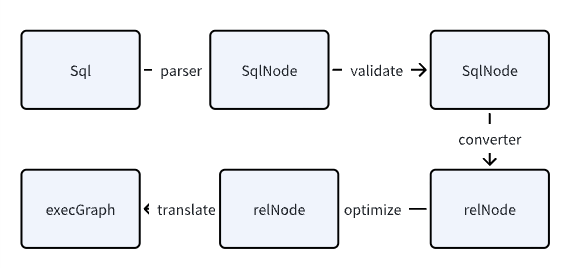

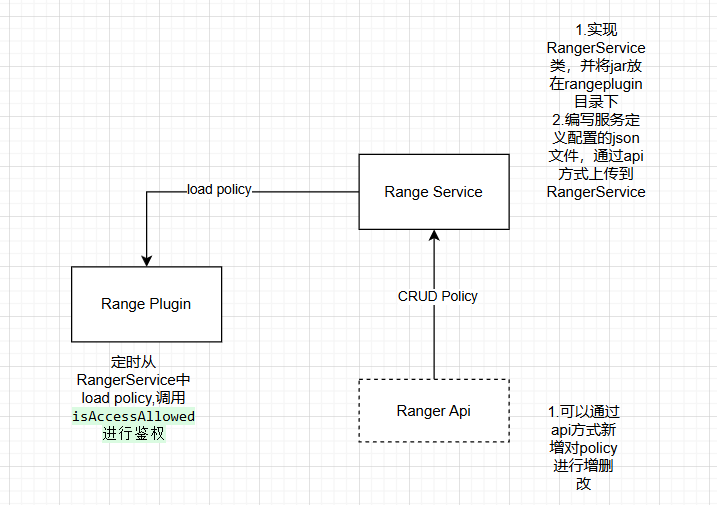

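Apache Ranger provides a unified security framework for the Hadoop ecosystem. It covers access control and centralized auditing, which records operations such as who accessed Ranger to set or check permissions. Developing a Ranger plugin involves three main parts:

Ranger server side: define the service type in a JSON file and upload it to Ranger Admin. Also implement a RangerBaseService subclass, which Ranger uses for resource lookup and configuration validation. Package it as a jar and put it in the ranger-plugins/ directory of Ranger Admin.

Ranger authorization plugin: implement an authorization plugin on top of the interfaces Ranger provides. The plugin periodically syncs policies from Ranger Admin into the local process, and the service that needs authorization calls the plugin's interface to check permissions. Many services, such as Doris and Hive, embed this plugin in their own process. It can also be used standalone: for example, you can parse the SQL statement yourself to obtain the user and the tables involved, then call the plugin to authorize the request.

Ranger authorization API: permissions can be added manually in the Ranger UI. Ranger also exposes an API through which users can add, modify or delete permissions. In Ranger, these permissions are called policies (RangerPolicy).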

Ranger Server Side

Implementing RangerBaseService

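```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

import org.apache.ranger.plugin.service.RangerBaseService;
import org.apache.ranger.plugin.service.ResourceLookupContext;

public class RangerServicePaimon extends RangerBaseService {

    // Called from the Ranger Admin UI ("Test Connection") to validate the service configs
    @Override
    public Map<String, Object> validateConfig() {
        return new HashMap<>();
    }

    // Called from the Ranger Admin UI to look up resource names while a policy is being edited
    @Override
    public List<String> lookupResource(ResourceLookupContext resourceLookupContext) throws Exception {
        return new ArrayList<>();
    }
}
```

This class has two methods, both called from the Ranger Admin UI: one for resource lookup and one for configuration validation. Neither needs a real implementation, and returning empty results works fine. Then put the jar in the ranger-plugins/ directory of Ranger Admin (typically ews/webapp/WEB-INF/classes/ranger-plugins/<service type>/).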

Service Definition File

The main fields are as follows. implClass is the RangerBaseService implementation class in the jar. resources lists the resources whose access is checked, and accessTypes lists the access types to check. configs are the settings entered when the service is created in the Ranger Admin UI, and RangerBaseService.validateConfig() validates them. dataMaskDef is used to mask specific columns, and its maskTypes lists the masking functions. rowFilterDef is used for row-level filtering. JSON does not allow comments, so the file itself must not contain any.

```json
{
  "name": "paimon",
  "displayName": "Paimon",
  "implClass": "com.jiduauto.ranger.service.paimon.RangerServicePaimon",
  "label": "Paimon",
  "description": "Paimon",
  "resources": [
    {
      "itemId": 1,
      "name": "catalog",
      "type": "string",
      "level": 10,
      "parent": "",
      "mandatory": true,
      "isValidLeaf": true,
      "lookupSupported": true,
      "recursiveSupported": false,
      "excludesSupported": true,
      "matcher": "org.apache.ranger.plugin.resourcematcher.RangerDefaultResourceMatcher",
      "matcherOptions": { "wildCard": true, "ignoreCase": true },
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": "",
      "accessTypeRestrictions": ["create", "show", "alter", "drop"],
      "label": "Paimon Catalog",
      "description": "Paimon Catalog"
    },
    {
      "itemId": 2,
      "name": "database",
      "type": "string",
      "level": 20,
      "parent": "catalog",
      "mandatory": true,
      "isValidLeaf": true,
      "lookupSupported": true,
      "recursiveSupported": false,
      "excludesSupported": true,
      "matcher": "org.apache.ranger.plugin.resourcematcher.RangerDefaultResourceMatcher",
      "matcherOptions": { "wildCard": true, "ignoreCase": true },
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": "",
      "accessTypeRestrictions": ["create", "show", "alter", "drop"],
      "label": "Paimon Database",
      "description": "Paimon Database"
    },
    {
      "itemId": 3,
      "name": "table",
      "type": "string",
      "level": 30,
      "parent": "database",
      "mandatory": true,
      "isValidLeaf": true,
      "lookupSupported": true,
      "recursiveSupported": false,
      "excludesSupported": true,
      "matcher": "org.apache.ranger.plugin.resourcematcher.RangerDefaultResourceMatcher",
      "matcherOptions": { "wildCard": true, "ignoreCase": true },
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": "",
      "accessTypeRestrictions": ["create", "show", "alter", "drop", "insert", "select"],
      "label": "Paimon Table",
      "description": "Paimon Table"
    },
    {
      "itemId": 4,
      "name": "column",
      "type": "string",
      "level": 40,
      "parent": "table",
      "mandatory": true,
      "lookupSupported": true,
      "recursiveSupported": false,
      "excludesSupported": true,
      "matcher": "org.apache.ranger.plugin.resourcematcher.RangerDefaultResourceMatcher",
      "matcherOptions": { "wildCard": true, "ignoreCase": true },
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": "",
      "accessTypeRestrictions": ["select"],
      "label": "Paimon Column",
      "description": "Paimon Column"
    }
  ],
  "accessTypes": [
    { "itemId": 1, "name": "show", "label": "Show" },
    { "itemId": 2, "name": "insert", "label": "Insert" },
    { "itemId": 3, "name": "alter", "label": "Alter" },
    { "itemId": 4, "name": "create", "label": "Create" },
    { "itemId": 5, "name": "drop", "label": "Drop" },
    { "itemId": 6, "name": "select", "label": "Select" },
    {
      "itemId": 7,
      "name": "all",
      "label": "All",
      "impliedGrants": ["select", "insert", "create", "drop", "alter", "show"]
    }
  ],
  "configs": [
    {
      "itemId": 1,
      "name": "username",
      "type": "string",
      "mandatory": true,
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": "",
      "label": "Username"
    },
    {
      "itemId": 2,
      "name": "password",
      "type": "password",
      "mandatory": false,
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": "",
      "label": "Password"
    },
    {
      "itemId": 3,
      "name": "jdbc.driver_class",
      "type": "string",
      "mandatory": true,
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": "",
      "defaultValue": "com.mysql.cj.jdbc.Driver"
    },
    {
      "itemId": 4,
      "name": "jdbc.url",
      "type": "string",
      "mandatory": true,
      "defaultValue": "",
      "validationRegEx": "",
      "validationMessage": "",
      "uiHint": ""
    }
  ],
  "enums": [],
  "contextEnrichers": [],
  "policyConditions": [],
  "dataMaskDef": {
    "accessTypes": [{ "name": "select" }],
    "resources": [
      { "name": "catalog", "matcherOptions": { "wildCard": "true" }, "lookupSupported": true, "uiHint": "{ \"singleValue\":true }" },
      { "name": "database", "matcherOptions": { "wildCard": "true" }, "lookupSupported": true, "uiHint": "{ \"singleValue\":true }" },
      { "name": "table", "matcherOptions": { "wildCard": "true" }, "lookupSupported": true, "uiHint": "{ \"singleValue\":true }" },
      { "name": "column", "matcherOptions": { "wildCard": "true" }, "lookupSupported": true, "uiHint": "{ \"singleValue\":true }" }
    ],
    "maskTypes": [
      {
        "itemId": 1,
        "name": "MASK",
        "label": "Redact",
        "description": "Replace lowercase with 'x', uppercase with 'X', digits with '0'",
        "transformer": "mask({col})",
        "dataMaskOptions": {}
      },
      {
        "itemId": 2,
        "name": "MASK_SHOW_LAST_4",
        "label": "Partial mask: show last 4",
        "description": "Show last 4 characters; replace rest with 'x'",
        "transformer": "mask_show_last_n({col}, 4, 'x', 'x', 'x', -1, '1')"
      },
      {
        "itemId": 3,
        "name": "MASK_SHOW_FIRST_4",
        "label": "Partial mask: show first 4",
        "description": "Show first 4 characters; replace rest with 'x'",
        "transformer": "mask_show_first_n({col}, 4, 'x', 'x', 'x', -1, '1')"
      },
      {
        "itemId": 4,
        "name": "MASK_HASH",
        "label": "Hash",
        "description": "Hash the value",
        "transformer": "mask_hash({col})"
      },
      { "itemId": 5, "name": "MASK_NULL", "label": "Nullify", "description": "Replace with NULL" },
      { "itemId": 6, "name": "MASK_NONE", "label": "Unmasked (retain original value)", "description": "No masking" },
      {
        "itemId": 12,
        "name": "MASK_DATE_SHOW_YEAR",
        "label": "Date: show only year",
        "description": "Date: show only year",
        "transformer": "mask({col}, 'x', 'x', 'x', -1, '1', 1, 0, -1)"
      },
      { "itemId": 13, "name": "CUSTOM", "label": "Custom", "description": "Custom" }
    ]
  },
  "rowFilterDef": {
    "accessTypes": [{ "name": "select" }],
    "resources": [
      { "name": "catalog", "matcherOptions": { "wildCard": "true" }, "lookupSupported": true, "mandatory": true, "uiHint": "{ \"singleValue\":true }" },
      { "name": "database", "matcherOptions": { "wildCard": "true" }, "lookupSupported": true, "mandatory": true, "uiHint": "{ \"singleValue\":true }" },
      { "name": "table", "matcherOptions": { "wildCard": "true" }, "lookupSupported": true, "mandatory": true, "uiHint": "{ \"singleValue\":true }" }
    ]
  }
}
```

In short, the definition declares a set of resources and the access types checked on them. For example, the table resource supports the access types create, show, alter, drop, insert and select.
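How accessTypeRestrictions and impliedGrants fit together can be modelled in a few lines. The sketch below is a simplified, stdlib-only illustration, not Ranger's policy engine. The class name AccessTypeModel and its methods are hypothetical, and the maps are copied by hand from the definition above. expand() adds the access types implied by a grant ("all" implies every other type), and effectiveAt() keeps only the types listed for a given resource level.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model of the service definition above; not Ranger's own code.
final class AccessTypeModel {
    // accessTypeRestrictions per resource level, copied from the definition
    static final Map<String, List<String>> RESTRICTIONS = Map.of(
            "catalog", List.of("create", "show", "alter", "drop"),
            "database", List.of("create", "show", "alter", "drop"),
            "table", List.of("create", "show", "alter", "drop", "insert", "select"),
            "column", List.of("select"));

    // impliedGrants: granting "all" also grants these access types
    static final Map<String, List<String>> IMPLIED = Map.of(
            "all", List.of("select", "insert", "create", "drop", "alter", "show"));

    /** Returns the granted access types plus everything they imply. */
    static Set<String> expand(List<String> granted) {
        Set<String> result = new LinkedHashSet<>();
        for (String type : granted) {
            result.add(type);
            result.addAll(IMPLIED.getOrDefault(type, List.of()));
        }
        return result;
    }

    /** Keeps only the expanded access types that are listed for the given resource level. */
    static Set<String> effectiveAt(String level, List<String> granted) {
        Set<String> result = expand(granted);
        result.retainAll(RESTRICTIONS.getOrDefault(level, List.of()));
        return result;
    }
}
```

In this model, granting all at column level leaves only select, while granting select alone at catalog level leaves nothing.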

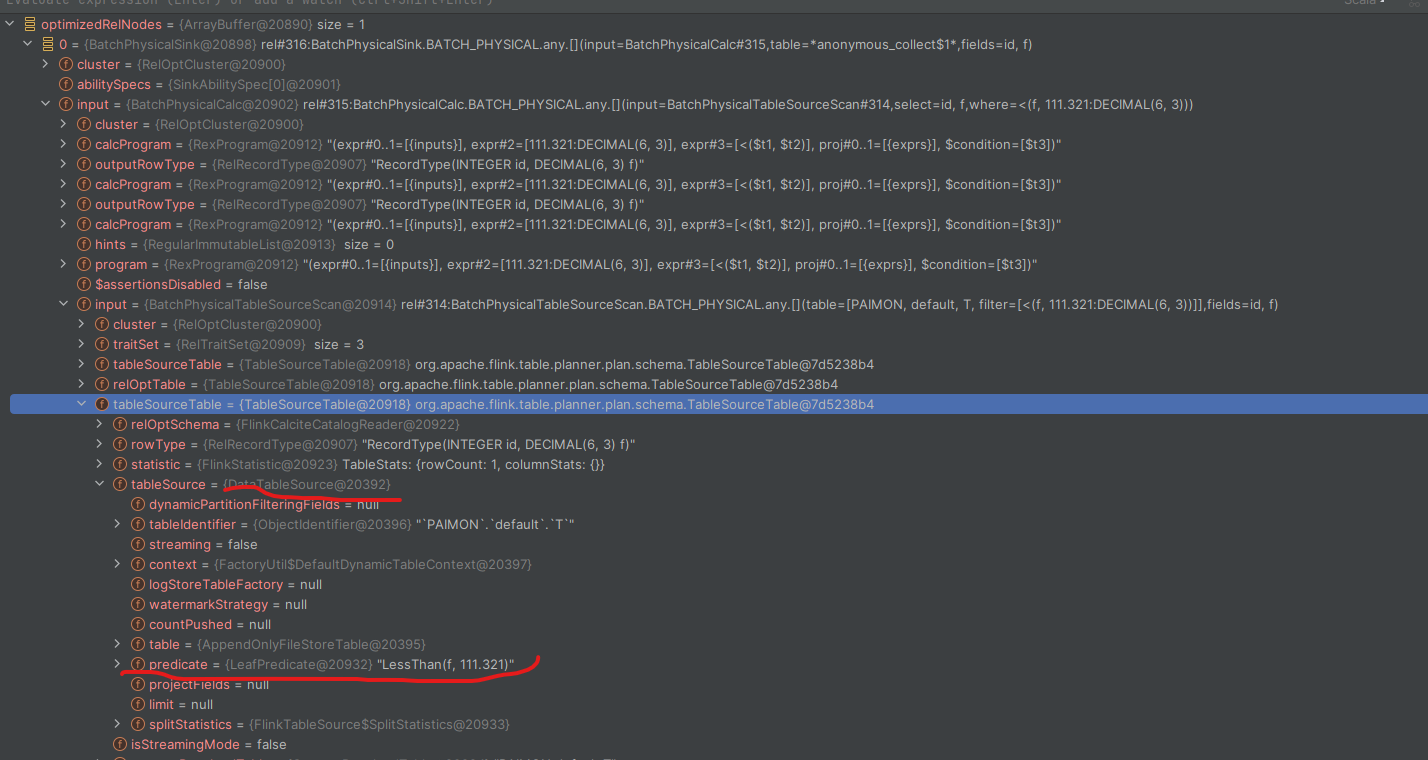
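Every resource in the definition is matched by RangerDefaultResourceMatcher with wildCard and ignoreCase enabled, so a policy value such as ods_* also covers a database named ODS_ORDERS. The following is a simplified stand-in for that behaviour, assuming only the * and ? wildcards and case-insensitive comparison. Ranger's real matcher supports more, such as excludes and multiple values, and WildcardMatch is a hypothetical name.

```java
import java.util.Locale;

// Hypothetical, simplified wildcard matcher; not Ranger's RangerDefaultResourceMatcher.
final class WildcardMatch {
    /** Case-insensitive match where * matches any run of characters and ? exactly one. */
    static boolean matches(String pattern, String value) {
        String p = pattern.toLowerCase(Locale.ROOT);
        String v = value.toLowerCase(Locale.ROOT);
        int pi = 0;    // position in the pattern
        int vi = 0;    // position in the value
        int star = -1; // position of the last * seen in the pattern
        int mark = 0;  // position in the value where that * started matching
        while (vi < v.length()) {
            if (pi < p.length() && p.charAt(pi) == '*') {
                star = pi++;
                mark = vi;
            } else if (pi < p.length() && (p.charAt(pi) == '?' || p.charAt(pi) == v.charAt(vi))) {
                pi++;
                vi++;
            } else if (star >= 0) {
                // Backtrack: let the last * absorb one more character
                pi = star + 1;
                vi = ++mark;
            } else {
                return false;
            }
        }
        // Trailing * characters can match the empty string
        while (pi < p.length() && p.charAt(pi) == '*') {
            pi++;
        }
        return pi == p.length();
    }
}
```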

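A quick word on dataMaskDef. Suppose some columns must be masked when users query them. If a user runs SELECT NAME,PHONE FROM USER and the PHONE column should be masked, the query can be rewritten as SELECT NAME,CAST(mask(PHONE) AS STRING) FROM USER. To implement this, the platform parses the SQL to find the columns of each table. It then asks Ranger whether each column needs data masking, and if so replaces the column with the corresponding function.

This article covers the topic in more detail: https://juejin.cn/post/7231858374827933753

Note: Ranger only records which table columns need data masking. Parsing the SQL and similar work must be done by the platform itself. Think of Ranger as a database that stores permission-related information.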
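The rewrite is the platform's job. Below is a minimal sketch of that last step, assuming the platform has already parsed the query into a table and its projected columns and has asked Ranger which mask type applies to each column. It substitutes the column name into the transformer template from maskTypes (the {col} placeholder) and wraps the result in CAST(... AS STRING), as in the example above. MaskRewriter and its methods are hypothetical. Mapping MASK_NULL to a literal NULL is an assumption, since the definition gives it no transformer, and CUSTOM is not handled.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical platform-side rewriter; Ranger itself only stores the masking policy.
final class MaskRewriter {
    // Transformer templates copied from maskTypes; MASK_NULL -> NULL is an assumption
    static final Map<String, String> TRANSFORMERS = Map.of(
            "MASK", "mask({col})",
            "MASK_SHOW_LAST_4", "mask_show_last_n({col}, 4, 'x', 'x', 'x', -1, '1')",
            "MASK_SHOW_FIRST_4", "mask_show_first_n({col}, 4, 'x', 'x', 'x', -1, '1')",
            "MASK_HASH", "mask_hash({col})",
            "MASK_NULL", "NULL");

    /** Rewrites one projected column; unmasked columns (null, MASK_NONE, unknown) are kept as-is. */
    static String rewriteColumn(String column, String maskType) {
        String template = maskType == null ? null : TRANSFORMERS.get(maskType);
        if (template == null) {
            return column;
        }
        return "CAST(" + template.replace("{col}", column) + " AS STRING)";
    }

    /** Builds SELECT <columns> FROM <table>, given the mask type Ranger reported per column. */
    static String rewriteSelect(String table, List<String> columns, Map<String, String> maskByColumn) {
        String projection = columns.stream()
                .map(c -> rewriteColumn(c, maskByColumn.get(c)))
                .collect(Collectors.joining(","));
        return "SELECT " + projection + " FROM " + table;
    }
}
```

With PHONE mapped to MASK, rewriteSelect("USER", List.of("NAME", "PHONE"), Map.of("PHONE", "MASK")) reproduces the rewritten query from the example.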

Ranger Plugin

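The Ranger plugin periodically loads the relevant policies from Ranger Admin and authorizes requests locally. It needs three XML files, which must be placed under the resources directory or on the Java classpath at startup: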

ranger-paimon-dev-audit.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
</configuration>
```

ranger-paimon-dev-policymgr-ssl.xml

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration xmlns:xi="http://www.w3.org/2001/XInclude">
  <!-- The following properties are used for 2-way SSL client server validation -->
  <property>
    <name>xasecure.policymgr.clientssl.keystore</name>
    <value>hadoopdev-clientcert.jks</value>
    <description>Java Keystore files</description>
  </property>
  <property>
    <name>xasecure.policymgr.clientssl.truststore</name>
    <value>cacerts-xasecure.jks</value>
    <description>java truststore file</description>
  </property>
  <!-- Choose your own path -->
  <property>
    <name>xasecure.policymgr.clientssl.keystore.credential.file</name>
    <value>jceks://file/User/xxxx/work/keystore-hadoopdev-ssl.jceks</value>
  </property>
  <!-- Choose your own path -->
  <property>
    <name>xasecure.policymgr.clientssl.truststore.credential.file</name>
    <value>jceks://file/User/xxx/work/truststore-hadoopdev-ssl.jceks</value>
  </property>
</configuration>
```

ranger-paimon-dev-security.xml

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration xmlns:xi="http://www.w3.org/2001/XInclude">
  <!-- Name of the Ranger service created in the test environment -->
  <property>
    <name>ranger.plugin.paimon-dev.service.name</name>
    <value>paimonrt</value>
  </property>
  <property>
    <name>ranger.plugin.paimon-dev.policy.source.impl</name>
    <value>org.apache.ranger.admin.client.RangerAdminRESTClient</value>
  </property>
  <!-- Ranger Admin URL of the test environment; if Kerberos authentication is enabled, use the hostname, not an IP address -->
  <property>
    <name>ranger.plugin.paimon-dev.policy.rest.url</name>
    <value>xxxxxx</value>
  </property>
  <property>
    <name>ranger.plugin.paimon-dev.policy.pollIntervalMs</name>
    <value>30000</value>
    <description>How often to poll for changes in policies?</description>
  </property>
  <property>
    <name>ranger.plugin.paimon-dev.policy.rest.ssl.config.file</name>
    <value>ranger-paimon-dev-policymgr-ssl.xml</value>
    <description>Path to the file containing SSL details to contact Ranger Admin</description>
  </property>
  <!-- Choose your own cache directory -->
  <property>
    <name>ranger.plugin.paimon-dev.policy.cache.dir</name>
    <value>/Users/xxxx/work/cache</value>
    <description>Directory where Ranger policies are cached after successful retrieval from the source</description>
  </property>
</configuration>
```
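The file names and the property prefix are not arbitrary. In recent Ranger versions, RangerBasePlugin (through RangerPluginConfig) looks for ranger-<serviceType>-audit.xml, ranger-<serviceType>-security.xml and ranger-<serviceType>-policymgr-ssl.xml. It also reads properties under ranger.plugin.<serviceType>, where serviceType is the value passed to the plugin constructor. The files above therefore correspond to a service type of paimon-dev. The following small stdlib-only helper reports which expected files are missing from the classpath before the plugin starts. RangerConfigCheck is a hypothetical name, and the naming pattern is worth double-checking against the Ranger version you use.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical pre-flight check; the naming pattern mirrors RangerPluginConfig (verify for your version).
final class RangerConfigCheck {
    /** Config file names the plugin looks for, derived from the service type. */
    static List<String> expectedFiles(String serviceType) {
        String prefix = "ranger-" + serviceType;
        return List.of(prefix + "-audit.xml", prefix + "-security.xml", prefix + "-policymgr-ssl.xml");
    }

    /** Property prefix used inside ranger-<serviceType>-security.xml. */
    static String propertyPrefix(String serviceType) {
        return "ranger.plugin." + serviceType;
    }

    /** Returns the expected files that cannot be found on the classpath. */
    static List<String> missingFromClasspath(String serviceType) {
        ClassLoader loader = RangerConfigCheck.class.getClassLoader();
        List<String> missing = new ArrayList<>();
        for (String file : expectedFiles(serviceType)) {
            if (loader.getResource(file) == null) {
                missing.add(file);
            }
        }
        return missing;
    }
}
```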

Implementing the plugin and checking permissions. The code below is only an example. For a more complete checkPermission() implementation, see:

https://github.com/apache/ranger/compare/master...herefree:ranger:support-paimon-ranger

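```java
import java.util.Date;
import java.util.Set;

import org.apache.ranger.plugin.policyengine.RangerAccessRequestImpl;
import org.apache.ranger.plugin.policyengine.RangerAccessResourceImpl;
import org.apache.ranger.plugin.policyengine.RangerAccessResult;
import org.apache.ranger.plugin.service.RangerBasePlugin;

public class RangerPaimonPlugin extends RangerBasePlugin {

    // serviceType selects the ranger-<serviceType>-*.xml files and the ranger.plugin.<serviceType> prefix
    public RangerPaimonPlugin(String serviceType) {
        super(serviceType, null, null);
        super.init(); // starts the background policy refresher
    }

    public RangerPaimonPlugin(String serviceType, String serviceName) {
        super(serviceType, serviceName, null);
        super.init();
    }
}

// Separate file: create the plugin once and reuse it, instead of creating one per check
public class PaimonAccessChecker {

    private static final RangerPaimonPlugin PLUGIN = new RangerPaimonPlugin("xxxx");

    public static boolean checkPermission(String catalog, String database, String table,
                                          String accessType, String user, Set<String> userGroups,
                                          String clientIp) {
        // Resource keys must match the resource names in the service definition
        RangerAccessResourceImpl resource = new RangerAccessResourceImpl();
        resource.setValue("catalog", catalog);
        resource.setValue("database", database);
        resource.setValue("table", table);

        RangerAccessRequestImpl request = new RangerAccessRequestImpl();
        request.setResource(resource);
        request.setAccessType(accessType); // e.g. "select"
        request.setUser(user);
        request.setUserGroups(userGroups);
        request.setAccessTime(new Date());
        request.setClientIPAddress(clientIp);

        RangerAccessResult result = PLUGIN.isAccessAllowed(request);
        return result != null && result.getIsAllowed();
    }
}
```

The resource keys passed to setValue must match the resource names in the service definition (catalog, database, table, column), and the access type must be one of its accessTypes names.

Ranger API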

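Policies can be created, queried, updated and deleted through the API, as well as in the Ranger Admin UI. The following shows how to create a policy through the API. Official Ranger client library documentation: https://cwiki.apache.org/confluence/display/RANGER/Ranger+Client+Libraries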

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

import org.apache.ranger.RangerClient;
import org.apache.ranger.RangerServiceException;
import org.apache.ranger.plugin.model.RangerPolicy;

public class PaimonPolicyManager {

    private final RangerClient rangerClient;
    private final String policyName;
    private final String serviceName; // name of the Ranger service, e.g. paimonrt

    public PaimonPolicyManager(RangerClient rangerClient, String policyName, String serviceName) {
        this.rangerClient = rangerClient;
        this.policyName = policyName;
        this.serviceName = serviceName;
    }

    public void createPolicy(String group, String dbName, String tbName, String permission)
            throws RangerServiceException {
        // First check through the API whether the policy already exists
        Map<String, String> filter = new HashMap<>();
        filter.put("policyName", policyName);
        filter.put("serviceName", serviceName);
        List<RangerPolicy> policies = rangerClient.findPolicies(filter);

        if (policies.isEmpty()) {
            // Not found: create a new policy
            rangerClient.createPolicy(createPolicy(group, policyName, dbName, tbName,
                    Collections.singletonList(permission)));
        } else if (!checkPolicy(policies.get(0), group, permission)) {
            // Found, but the group lacks this permission: append a new PolicyItem
            // (e.g. the group already has select and now also needs drop)
            RangerPolicy rangerPolicy = addPolicyItems(policies.get(0), group,
                    Collections.singletonList(permission));
            rangerClient.updatePolicy(serviceName, policyName, rangerPolicy);
        }
    }

    /** Returns true if the policy already grants permissionOp to the group. */
    public boolean checkPolicy(RangerPolicy rangerPolicy, String group, String permissionOp) {
        for (RangerPolicy.RangerPolicyItem policyItem : rangerPolicy.getPolicyItems()) {
            if (!policyItem.getGroups().contains(group)) {
                continue;
            }
            for (RangerPolicy.RangerPolicyItemAccess access : policyItem.getAccesses()) {
                if (access.getType().equals(permissionOp)) {
                    return true;
                }
            }
        }
        return false;
    }

    public RangerPolicy createPolicy(String group, String policyName, String dbName, String tbName,
                                     List<String> permissionOpsList) {
        RangerPolicy rangerPolicy = new RangerPolicy();
        rangerPolicy.setService(serviceName);
        rangerPolicy.setName(policyName);
        rangerPolicy.setResources(createResource(dbName, tbName));
        List<RangerPolicy.RangerPolicyItem> rangerPolicyItemList = new ArrayList<>();
        for (String op : permissionOpsList) {
            rangerPolicyItemList.add(createRangerPolicyItem(group, op));
        }
        rangerPolicy.setPolicyItems(rangerPolicyItemList);
        return rangerPolicy;
    }

    public static Map<String, RangerPolicy.RangerPolicyResource> createResource(String dbName, String tbName) {
        // Keys must match the resource names in the service definition
        Map<String, RangerPolicy.RangerPolicyResource> resourceMap = new HashMap<>();
        resourceMap.put("catalog", new RangerPolicy.RangerPolicyResource("paimon"));
        resourceMap.put("database", new RangerPolicy.RangerPolicyResource(dbName));
        resourceMap.put("table", new RangerPolicy.RangerPolicyResource(tbName));
        return resourceMap;
    }

    public RangerPolicy.RangerPolicyItem createRangerPolicyItem(String group, String permission) {
        RangerPolicy.RangerPolicyItem rangerPolicyItem = new RangerPolicy.RangerPolicyItem();
        rangerPolicyItem.setGroups(Collections.singletonList(group));
        RangerPolicy.RangerPolicyItemAccess rangerPolicyItemAccess = new RangerPolicy.RangerPolicyItemAccess();
        rangerPolicyItemAccess.setType(permission);
        rangerPolicyItemAccess.setIsAllowed(true);
        rangerPolicyItem.setAccesses(Collections.singletonList(rangerPolicyItemAccess));
        return rangerPolicyItem;
    }

    private RangerPolicy addPolicyItems(RangerPolicy rangerPolicy, String group, List<String> permissionOpList) {
        for (String permissionOp : permissionOpList) {
            rangerPolicy.getPolicyItems().add(createRangerPolicyItem(group, permissionOp));
        }
        return rangerPolicy;
    }
}
```
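If adding the Ranger client library is inconvenient, the same policy can be created with a plain HTTP call to Ranger Admin's public REST API, POST /service/public/v2/api/policy. This is part of the same public v2 API that RangerClient calls. The following is a minimal JDK-only sketch (Java 11+ for java.net.http). policyJson builds the policy body using the resource names from the service definition, and createPolicy posts it with basic authentication. The class and method names, the admin URL and the credentials are assumptions. No JSON library is used, so the values are assumed to need no escaping.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical REST-based alternative to RangerClient.createPolicy.
final class RangerPolicyRest {
    /** JSON body granting one access type to one group on catalog/database/table (no escaping done). */
    static String policyJson(String service, String name, String catalog, String database,
                             String table, String group, String accessType) {
        return "{\"service\":\"" + service + "\",\"name\":\"" + name + "\","
                + "\"resources\":{"
                + "\"catalog\":{\"values\":[\"" + catalog + "\"]},"
                + "\"database\":{\"values\":[\"" + database + "\"]},"
                + "\"table\":{\"values\":[\"" + table + "\"]}},"
                + "\"policyItems\":[{\"groups\":[\"" + group + "\"],"
                + "\"accesses\":[{\"type\":\"" + accessType + "\",\"isAllowed\":true}]}]}";
    }

    /** POSTs the policy to Ranger Admin with basic auth and returns the HTTP status code. */
    static int createPolicy(String adminUrl, String user, String password, String json)
            throws IOException, InterruptedException {
        String auth = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        HttpRequest request = HttpRequest.newBuilder(URI.create(adminUrl + "/service/public/v2/api/policy"))
                .header("Content-Type", "application/json")
                .header("Accept", "application/json")
                .header("Authorization", "Basic " + auth)
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode();
    }
}
```

Unlike PaimonPolicyManager, this sketch does not check for an existing policy first, so a second call with the same name would be rejected by Ranger Admin rather than merged.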